On Friday, March 29th, OPIM Innovate hosted its Alexa Skill-Building Workshop from 1:00 PM to 3:00 PM in the Gladstein Lab, BUSN 309. Led by Tyler Lauretti (MIS ’18), a Travelers Information Technology Leadership Development Program employee and Alexa developer, the workshop introduced Amazon’s Alexa service through the lens of artificial intelligence and offered attendees the opportunity to develop their own Alexa skills, or voice-activated applications, with a zero-code graphical interface called Voiceflow.

Before introducing attendees to Alexa skill building, Lauretti described Alexa in artificial intelligence (A.I.) terms. “The speech recognition and natural language processing realms [of A.I.] really make up the core of Alexa,” said Lauretti, “but what really makes Alexa and other voice assistants strong and powerful is machine learning.” Through speech recognition, Alexa transforms recordings of voice requests into text. Through natural language processing, she extracts the pertinent information from that text, formulates a text response, and then voices that response to the user. With machine learning added to the mix, Alexa learns from each request, and her underlying models improve over time. This is why, for example, a user with a strong accent tends to be understood more easily the more they use the device.

In addition to the A.I. overview, Lauretti clarified a few common but incorrect assumptions about Amazon Alexa. For one, Alexa itself cannot be purchased from Amazon, unlike the company’s Echo and Echo Dot smart speakers. Why? Unlike Amazon’s physical hardware, Alexa is a cloud-based voice service that connects to all Amazon smart devices and some third-party devices. So while Alexa complements Amazon’s smart speakers, she is also the overarching artificial intelligence framework that keeps every device responsive. When a user asks Alexa something through a smart speaker, for example, a recording of the request is sent to the Amazon cloud for processing and analysis. Then, once the meaning of the request has been predicted, Alexa gives her best response.

Because Alexa is a speech and natural language processor, apps built for her are limited by her ability to “hear” and to “speak.” Alexa skills therefore have to be “voice first,” meaning they must be usable with vocal input alone. This can be a weakness in some cases, especially for skills that would require users to divulge sensitive information out loud. In Lauretti’s experience, the best Alexa skill to develop is “something that’s fun for you to do.” That can range from Disney trivia to helping users decide whether to order pizza or wings for the night, both skills Lauretti has built in the past. Skill building can also be practical: many businesses are currently building their own Alexa skills to improve the customer experience. Whatever the purpose, Alexa’s responses should sound like a conversation with a friend. They should include natural contractions and pauses, use follow-up questions, and vary their phrasing with acknowledgments like “Sure!” and “Got it!”
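For readers who later move to a code-based skill, the response guidelines above can be sketched in a few lines. This is a minimal, hypothetical Python example, not Lauretti’s code or an Alexa Skills Kit API: the `build_response` helper, the `ACKNOWLEDGMENTS` list (including “Okay!”), and the 300 ms pause length are all assumptions. It uses SSML, the markup Alexa accepts for speech output, where `<break time="..."/>` inserts a pause.

```python
import random
from typing import Optional
from xml.sax.saxutils import escape

# Hypothetical acknowledgments; "Sure!" and "Got it!" come from the workshop,
# "Okay!" is an assumed extra option.
ACKNOWLEDGMENTS = ("Sure!", "Got it!", "Okay!")


def build_response(answer: str, follow_up: str,
                   rng: Optional[random.Random] = None) -> str:
    """Build an SSML reply: varied acknowledgment, answer, short pause, follow-up question."""
    rng = rng or random.Random()
    ack = rng.choice(ACKNOWLEDGMENTS)  # vary the opener so replies don't sound scripted
    # Escape &, <, and > so free text cannot break the SSML markup.
    return (
        f"<speak>{ack} {escape(answer)} "
        f'<break time="300ms"/> {escape(follow_up)}</speak>'
    )


# Contractions like "I'd" are kept in the text so the reply sounds natural.
print(build_response("I'd go with pizza tonight.", "Want me to suggest a topping?"))
```

Picking the acknowledgment at random is just one simple way to add variation; a real skill might instead rotate phrases or choose them based on context.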

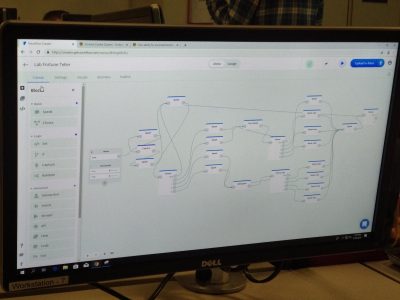

Before using Voiceflow, Lauretti had attendees make paper fortune tellers (shown to the right). The exercise showed that Alexa can process only voice information and cannot use any other “senses” to make a decision. A paper fortune teller requires you to pull, fold, and unfold it and to process visual information, but Alexa cannot do any of that; she can only be programmed to listen and respond. Accordingly, when attendees began using Voiceflow, which lets you chain commands and responses together in a decision-tree-like diagram that can loop back on itself, every block available in the graphical interface involved either speaking or listening for information. For example, to emulate a paper fortune teller game, you can create a “Speak” block containing directions for picking a color, which Alexa voices to the user. That “Speak” block can then be chained to a “Choice” block listing all the choices to listen for in the voice input (blue, red, yellow, or green, for example). If the user does not pick a color on that list, the “Choice” block can be linked back to the “Speak” block through an “else” option so Alexa can repeat the color choices. As a result, a Voiceflow skill diagram can become very involved very quickly. Even so, however complex the visual model becomes, it is simple to build.
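Voiceflow itself requires no code, but the Speak, Choice, and “else” loop described above can be mirrored in a short program to show the logic the diagram encodes. The Python below is a hypothetical simulation, not Voiceflow’s export format or API: the prompt wording and the `choice_block` and `run_fortune_teller` names are assumptions, and the user’s spoken input is stood in for by a list of strings.

```python
from typing import Iterable, List, Optional

# Hypothetical block contents modeled on the workshop's fortune-teller example.
COLORS = ("blue", "red", "yellow", "green")
SPEAK_PROMPT = "Pick a color: blue, red, yellow, or green."
REPROMPT = "Sorry, I didn't catch that. Please say blue, red, yellow, or green."


def choice_block(utterance: str) -> Optional[str]:
    """Return the first color (in COLORS order) heard in the utterance, or None for "else"."""
    words = utterance.lower().replace(",", " ").replace(".", " ").split()
    for color in COLORS:
        if color in words:
            return color
    return None


def run_fortune_teller(utterances: Iterable[str]) -> List[str]:
    """Simulate Speak -> Choice, looping back through "else" until a listed color is heard."""
    transcript = [SPEAK_PROMPT]  # the "Speak" block voices the directions
    for utterance in utterances:
        color = choice_block(utterance)
        if color is not None:
            transcript.append(f"You picked {color}.")
            return transcript
        transcript.append(REPROMPT)  # "else" path: clarify the color choices again
    return transcript


# "purple" is not on the list, so the else path reprompts before "red" is accepted.
for line in run_fortune_teller(["purple", "um, red please"]):
    print(line)
```

Each extra branch in the real game (numbers, then fortunes) would add another Choice step, which is why the diagram grows quickly even though each piece stays simple.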

After the Alexa Skill-Building Workshop, many students left with a greater understanding of the Alexa service and Alexa skills. “I didn’t realize how simple it was to make skills for your Alexa,” said Calvin Mahlstedt (MIS ’19), referring to the Voiceflow interface (shown on the left). Joanne Cheong (MIS ’20) was amazed by how many uses Alexa skills can have. “They can be used for various things like controlling smart home devices, providing quick information from the web, and challenging users with puzzles or games,” she said. Robert McClardy was impressed by the security of Amazon Alexa products: “They’re not as huge a vulnerability in comparison to the number of other means that data can be compromised.”

If you are interested in learning more about Voiceflow and Alexa skill building, OPIM Innovate has tech kits available for you to explore, including a tutorial on making the voice-enabled fortune teller game featured in the workshop. For a more code-oriented skill-building experience, consider downloading the free Alexa Skills Kit, which includes tools, documentation, and code samples to explore.

Thank you to all of those who attended the Alexa Skill-Building Workshop! We hope to see you in future workshops!